Nvidia, Intel, and AMD each have their own solutions, and what Xilinx brings is a PCIe machine learning card with an adaptable, programmable engine. Victor Peng, the CEO of Xilinx, showed that Amazon-owned Twitch can accelerate 1080p 120 FPS streaming to millions of customers using Xilinx's existing 16nm Zynq UltraScale+ product.

Twitch simply used the programmable part of the Zynq to run the VP9 codec and got incredible performance, whereas companies like Nvidia or AMD simply cannot change their hardware. Nvidia's solutions are great for training, but Xilinx Alveo may well win on latency simply because of its different architecture. Peng introduced the Alveo card, and we had a chance to see it up and running and to talk to some early adopters who already have the cards and are running their programs on them.

Xilinx now has a PCIe-based card and has shown that, in partnership with the AMD EPYC platform, it can even beat some world records. The inference performance record shows GoogLeNet running at 30,000 images per second with eight Xilinx Alveo U250 accelerator cards.
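To put the record in per-card terms, here is a minimal back-of-the-envelope sketch. It assumes the load is split evenly across the eight cards, which the announcement does not state; the variable names are ours.

```python
# Figures quoted in the article; the even split across cards is an assumption.
total_images_per_sec = 30_000
num_cards = 8

# Average throughput contributed by each Alveo U250 in the record run.
per_card = total_images_per_sec / num_cards
print(f"{per_card:.0f} images/sec per card")
```

Under that assumption each card averages 3,750 images per second.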

IBM also has a 922 Power system with an Alveo card, and Big Blue claims that it gets better inferencing scores using Xilinx, while Nvidia Volta does a better job in training. Inference customers are therefore likely to go after Alveo-based IBM machines, as inference will run faster and with lower latency.

Xilinx has two cards available today and will ship them in the next few weeks. The U200 has 892K look-up tables (LUTs), 35 MB of internal SRAM, 31 TB/s of internal SRAM bandwidth, and is capable of 3,100 images/second of CNN (convolutional neural network) throughput. Keep in mind that the CNN figure is based on low-latency GoogLeNet V1.

The faster version, the U250, has 1,351K look-up tables (LUTs), 54 MB of internal SRAM, 38 TB/s of internal SRAM bandwidth, and is capable of 4,100 images/second of CNN (convolutional neural network) throughput.

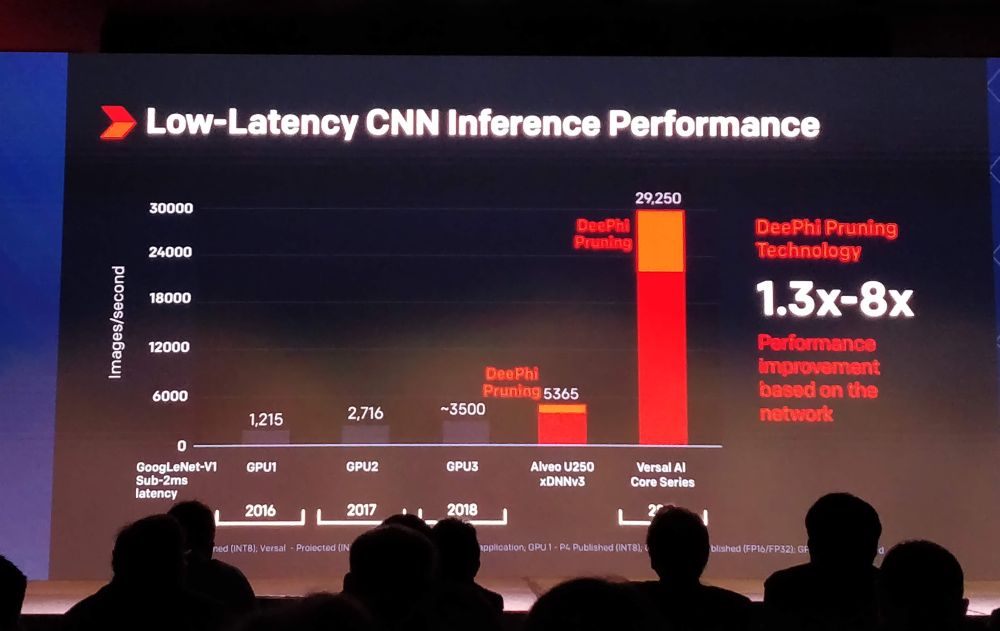

There are passive and active versions of the card, and you can test the cloud version using Nimbix today. The passively cooled Alveo U200 Data Center Accelerator Card is selling for $8,995, while the Alveo U250 Data Center Accelerator Card is selling for $12,995, with a lead time of four weeks. In DeePhi xDNNv3, the card with optimization can score as high as 5,365 images/sec, definitely faster than the 3,500 you can expect from Nvidia's 2018 Volta GPU.
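For a rough sense of price versus performance, the list prices can be divided by the quoted GoogLeNet V1 throughput. This is only a sketch using the vendor figures above; the dictionary layout and names are ours, and it ignores power, host system cost, and workload differences.

```python
# Prices and throughput as quoted in the article (vendor figures, GoogLeNet V1).
cards = {
    "Alveo U200": {"price_usd": 8_995, "images_per_sec": 3_100},
    "Alveo U250": {"price_usd": 12_995, "images_per_sec": 4_100},
}

# Dollars paid per image/second of quoted throughput (lower is better).
usd_per_ips = {
    name: spec["price_usd"] / spec["images_per_sec"]
    for name, spec in cards.items()
}

for name, value in usd_per_ips.items():
    print(f"{name}: ${value:.2f} per image/sec")
```

By this crude measure the U200 comes out at about $2.90 per image/sec and the U250 at about $3.17, so the bigger card buys more absolute throughput rather than better value per dollar.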

Xilinx is promising up to 90X higher performance than CPUs on key workloads at 1/3 the cost, along with over 4X higher inference throughput and a 3X latency advantage over GPU-based solutions. These claims still have to be tested, but if they hold, this is a significant lead over the competition.